irgNET – LAB 4.0 U2

Welcome to the 2nd update, which is actually quite large. I have added the Microserver Gen8 to the datacenter again because I still want a separation between the production environment and the backup environment.

From this update on, we refer to the 3-node cluster as Datacenter 1 and the standalone Microserver as Datacenter 2. I installed VMware ESXi 7.0 U1 on the Microserver and migrated the Veeam backup appliance via VMware vMotion. I also connected the external 2 TB USB hard drive to the Microserver.

In the software stack, I have been working a bit with Ansible, the open source automation solution from Red Hat. Together with my colleague, I wrote a playbook that selects a VM template from the vCenter and deploys a VM from it. More about this may follow in the next updates!

For more information about Ansible visit: https://www.ansible.com/

But that’s beside the point for now. From this update on, my mentor Marc Huppert got involved in my datacenter and changed quite a few things.

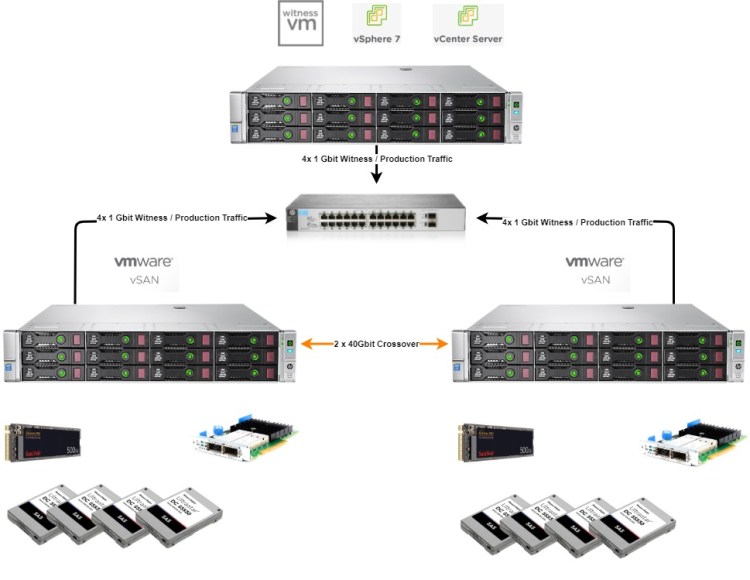

As you can see in the picture, a new component has been added to my VMware vSphere universe – vSAN:

Let's take a look at my architecture first. I have the 2-node cluster design in place, which consists of 2 vSAN nodes and 1 standalone node running the vSAN witness appliance (see below for more information).

Each vSAN node is equipped with:

- 2x Intel E5-2630 v2 (supported)

- 192 GB RAM

- 4x 800 GB SAS SSDs (capacity devices)

- 1x 512 GB M.2 SSD (cache device)

From the capacity SSDs and the cache M.2 SSD, one disk group is configured per node, and these disk groups together provide the vSAN datastore. In my case the failure protection is “FTT1 – RAID1 Two data copies”, which results in the following storage capacity:

Usable capacity is approx. 3 TB

Raw capacity is approx. 6 TB
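The two numbers above can be reproduced with a quick back-of-the-envelope calculation. This is only a rough sketch: the function name `vsan_capacity_tb` is made up for illustration, it assumes that only the capacity devices count toward the datastore (the cache device does not), and it ignores vSAN metadata overhead and recommended free space.

```python
def vsan_capacity_tb(nodes, capacity_disks_per_node, disk_size_gb, ftt=1):
    """Rough (raw_tb, usable_tb) estimate for RAID-1 mirroring.

    Hypothetical helper for illustration only; real usable capacity is
    lower because of vSAN overhead and slack space.
    """
    # Only capacity devices are counted; the cache SSD adds no capacity.
    raw_gb = nodes * capacity_disks_per_node * disk_size_gb
    # RAID-1 with FTT=1 stores two full copies of the data (ftt + 1).
    copies = ftt + 1
    usable_gb = raw_gb / copies
    return raw_gb / 1000, usable_gb / 1000


# Values from this lab: 2 vSAN nodes, 4x 800 GB capacity SSDs each, FTT1.
raw_tb, usable_tb = vsan_capacity_tb(nodes=2, capacity_disks_per_node=4, disk_size_gb=800)
print(f"raw: {raw_tb:.1f} TB, usable: {usable_tb:.1f} TB")
```

With the lab values this gives 6.4 TB raw and 3.2 TB usable, which matches the rounded figures of approx. 6 TB and approx. 3 TB.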

Furthermore, the vSAN nodes talk to each other over 2x 40 Gbit crossover links for lightning-fast FTT-1 protection and VMware vMotion, whenever it is triggered automatically by VMware DRS. All 3 nodes communicate via a 24-port 1 Gbit switch, and the whole thing looks like this:

With this move to a hyperconverged-like setup, I replaced both my NFS storage and my local storage with VMware vSAN.

Before we take a rough look at the functionality of VMware vSAN, I would like to talk about 3 possible deployments:

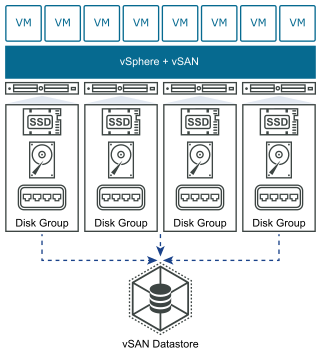

Standard vSAN Cluster

A standard vSAN cluster consists of a minimum of three hosts. Typically, all hosts in a standard vSAN cluster reside at the same location, and are connected on the same Layer 2 network. All-flash configurations require 10 Gb network connections, and this also is recommended for hybrid configurations.

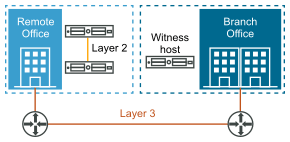

2 Node vSAN Cluster

Two-node vSAN clusters are often used for remote office/branch office environments, typically running a small number of workloads that require high availability. A two-node vSAN cluster consists of two hosts at the same location, connected to the same network switch or directly connected. You can configure a two-node vSAN cluster that uses a third host as a witness, which can be located remotely from the branch office. Usually the witness resides at the main site, along with the vCenter Server.

Stretched vSAN Cluster

A vSAN stretched cluster provides resiliency against the loss of an entire site. The hosts in a stretched cluster are distributed evenly across two sites. The two sites must have a network latency of no more than five milliseconds (5 ms). A vSAN witness host resides at a third site to provide the witness function. The witness also acts as tie-breaker in scenarios where a network partition occurs between the two data sites. Only metadata such as witness components is stored on the witness.
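The tie-breaker role of the witness can be illustrated with a small quorum sketch. It assumes a simplified model in which an object with two data copies and one witness component gets one vote per component and stays accessible only while more than half of the votes are reachable. The names `object_accessible`, `data_site_a`, `data_site_b` and `witness` are hypothetical, and real vSAN vote assignment can be more involved.

```python
def object_accessible(votes, available):
    """Return True if more than 50% of all votes are reachable.

    votes: dict mapping component name -> vote count (simplified model)
    available: set of component names that are currently reachable
    """
    total = sum(votes.values())
    reachable = sum(v for name, v in votes.items() if name in available)
    return reachable * 2 > total


# Simplified FTT1/RAID-1 object: two data copies plus one witness component.
votes = {"data_site_a": 1, "data_site_b": 1, "witness": 1}

# Site B is lost, but site A and the witness still form a majority.
print(object_accessible(votes, {"data_site_a", "witness"}))

# Site A is isolated from both site B and the witness: no majority.
print(object_accessible(votes, {"data_site_a"}))
```

In this model, the side of a network partition that can still reach the witness keeps the object available, while the isolated side does not.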

VMware vSAN Functionality

One way to understand vSANs is to compare them with hyperconvergence. Traditional IT architectures use separate components for compute (CPU and RAM), storage and networking. In contrast, hyperconvergence consolidates compute, storage and networking into a single, streamlined architecture managed via software.

Like hyperconverged infrastructures (HCIs), vSANs also run on x86 servers. When installed, vSANs eliminate the need for dedicated storage hardware within the enterprise’s IT infrastructure.

The software component of a vSAN provides a shared pool of storage that the virtual machines (VMs) can access on-demand.

Typically, the vSAN architecture has two or more nodes that provide the shared storage necessary for services such as High Availability (HA) and the Distributed Resource Scheduler (DRS).

VMware High Availability: https://www.vmware.com/products/vsphere/high-availability.html

VMware DRS: https://www.vmware.com/products/vsphere/drs-dpm.html

For more information about VMware vSAN please visit: https://www.vmware.com/products/vsan.html

Hardware List

Ubiquiti Networks UAP-AC-PRO: https://amzn.to/3DYJYyO

FRITZ!Repeater 3000: https://amzn.to/3jfI0R0

HPE 1820-24G (Follow-up product): https://amzn.to/3CB1HeO

HPE ProLiant MicroServer Gen10 (Follow-up product): https://amzn.to/2UsaWwI

FRITZ!Box 6660 (Follow-up product): https://amzn.to/3yUYAfF

Western Digital Black 2TB: https://amzn.to/2ZuUCOb

And yes, that’s it for this significant update, which has changed my datacenter architecture all around. If you have more questions, especially about VMware vSAN, post them in the comments! 🙂
